A persistent hum that haunts certain geographic locations continues to captivate researchers and torment listeners. First documented in Bristol during the mid-1970s, this mysterious low-frequency phenomenon—known simply as ‘the Hum’—has since been reported worldwide, from Taos, New Mexico, to Windsor, Ontario. Roughly four percent of the world's population claims to hear it: a droning, vibrating sound that seems to pulse just at the threshold of human hearing, often described as resembling an idling diesel engine that never moves away. The Hum remains unexplained, spawning theories ranging from industrial machinery and underground gas pipelines to more imaginative causes, such as electromagnetic interference from military communications systems or even the mating calls of fish resonating through water and hull.

What makes the Hum particularly unsettling is its capacity to drive those who hear it to distraction. Many in that four percent describe sleepless nights, headaches, and an overwhelming sense of isolation, especially when family members and neighbors are oblivious to the sound. This has given the Hum its own community of believers, united by a shared perception of an invisible threat. Online forums dedicated to the Hum share the same conspiratorial energy found in discussions of government surveillance and electromagnetic weapons. It’s a familiar 21st-century story, where empirical investigation has given way to paranoid speculation.

Enter Jamie Hamilton, a composer interested in the interplay between sound, technology, and interpretation. Hamilton's work collects disparate fragments to tell abstract stories about technology and belief, often by bringing together multiple practitioners. Hamilton has collaborated with luminaries including Elaine Mitchener, Lucy Railton, and Goldsmiths Prize winner Luke Williams, developing what he describes as a "bricolage" method informed by sound, text, and multimedia. His music has been broadcast on BBC Radio 3 and performed in venues ranging from abandoned grain silos to concert halls.

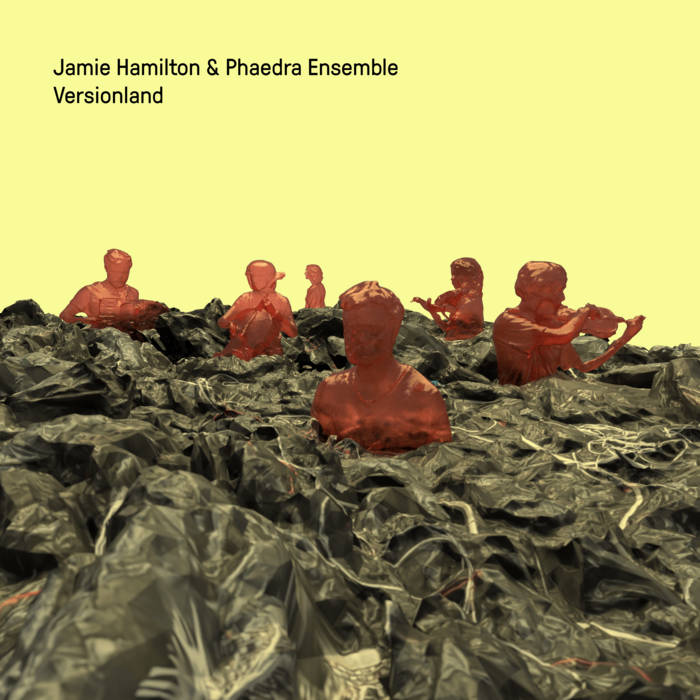

His recent album, Versionland, created in collaboration with London's Phaedra Ensemble, transforms the Hum from an individual experience into "a malign force destroying the world." Hamilton pored over pages of internet discussions, conspiracy theories, and obscure sound experiments, then fed this material through early neural network technology. The recordings were then reconstructed into dataset facsimiles, creating bizarre instrumental and vocal passages that splinter with each computerized iteration. The resulting album becomes what the press materials describe as "a darkly humorous fever-dream on paranoia and internet-age mythology."

In this interview, we discuss Hamilton's background as a classically trained composer who has moved toward interdisciplinary collaboration, his fascination with the Hum as both an acoustic phenomenon and a modern paranoia, and his use of AI technology during what he calls the "intermediate slop period" between its glitch-aesthetics and more sophisticated future applications. We also explore his long-standing relationship with Phaedra Ensemble, the challenges of integrating AI-generated texts with live performance, and the broader implications of using technology to investigate the very anxieties that technology itself has created.

Michael Donaldson: I'm interested in your background and previous projects. Could you share a little about your history and how you've developed your current practice?

Jamie Hamilton: I'm a classically trained composer, but have gravitated toward an interdisciplinary way of working. My work is often text-driven, and I'm interested in how meaning and language can be altered through music-making processes, usually involving a formalistic approach to structure. I often work with writers and other types of artists, and these collaborations have influenced my work away from a strictly score-based practice, toward what you might call bricolage: a type of making through combining, weaving, and layering. It's music that is often an assemblage of things.

Michael: Beyond the composing world, were you always interested in unusual phenomena like the Hum? Have you experienced it?

Jamie: I've always been a collector of strange audio and perceptual phenomena, and the Hum occasionally cropped up in books I was reading. I think it's of interest to a lot of people who are somehow attached to sound art, as it's almost a form of Deep Listening or Cagean ideas gone sinister.

My interest in the Hum solidified after working on a project with schizophrenic hospital patients about their aural hallucinations. There was a specific patient deeply plagued by the Hum, and through working with him, I realized how much this encapsulated a particular type of modern paranoia.

Since the album came out, I've had quite a few people, including long-term colleagues, approach me to tell me that they are 'Hum hearers.' Most of them have tinnitus, but they describe it as a clearly separate sound from their tinnitus, though also somehow tangibly related.

Michael: There certainly is something about collective paranoia or shared hallucination that serves as a metaphor for our current era.

Jamie: The parallel between the individual and collective hallucination is what drew me toward the Hum in the first place. It feels very much a distillation of our current moment, and audio's transitory nature is a perfect contested area where all sorts of complications of meaning can occur. I was interested in a piece that gradually loses control of itself and expands; I was into something messy that might not fully make sense, and where we have these gradually breaking down frames of reference, where the Hum can essentially be a stand-in for anything malign or unexplained. It's hyperbolic and quite daft as well—there's a melodrama that should be seen as a little bit tongue in cheek, even at times a bit blunt and stupid. There are a lot of literary parallels with these sorts of ideas, like Don DeLillo's White Noise or Tom McCarthy's Remainder. I suppose at times I'm trying to undercut the seriousness of it all and intentionally subvert these quite well-worn postmodern tropes.

Michael: Tell me about your relationship with Phaedra Ensemble. What do they bring out of you as an artist?

Jamie: I've worked with Phaedra for years now and am co-leader of the ensemble. It began as a fairly casual collaboration with the founder and artistic director, Phillip Granell, who is also an accomplished violinist. Gradually, I've developed a very collaborative process with Phaedra, and most of my music is made with them in mind. I've been fortunate that I've been able to develop a type of shared practice and collection of techniques and approaches. There's a lot of mutual trust there, and it's getting closer to how a band or theater group might work, where I direct the performers as much as I strictly compose for them.

Michael: In addition to their expected performances as instrumentalists, you relied on the ensemble members to speak the text sources. What was behind that choice?

Jamie: It's difficult when you take highly trained classical instrumentalists and ask them to disregard their decades of instrumental practice and use their largely untrained voices. It was also important to me that I had players who were comfortable with their voices. In a sense, it's a meeting in the middle—the musicians are always laudably open-minded, but I'm also trying to build and incorporate text in a way that somehow relates to their chamber music playing. This feels like the only way to avoid bad acting.

Michael: Where did you find your text sources? And why not just sample them?

Jamie: I started by downloading an entire archived Yahoo! mailing list about the Hum, most of which was from the 1990s. With these early AI models, your output hovers much closer to the boundaries of gibberish, so the texts are heavily edited and at times totally rewritten by me.

It was important to me not to use samples; I found the interesting aspect here was getting human reinterpretations of these very "non-human" texts. That's often been an interest of mine, and it shadows some of my interests in artists like Ryan Trecartin, Dadaist sound poetry, or constraint-based writing, such as Oulipo. I think there's still a lot of mileage to be explored in that.

Michael: That's fascinating. Is it relatively easy, then, to explain how you used neural networks on these texts and applied the outputs to Versionland?

Jamie: I use AI and neural network processes in quite a few different forms throughout Versionland. The most significant of these was training an early version of OpenAI's GPT-2 on the contents of the Yahoo! mailing list, which generated the texts that underpin the work—in that sense, the work is quite a conventional 'text setting.' I used some fairly crude audio AIs, which I'd sometimes transcribe and iterate with the ensemble, and create feedback processes of AI generation → ensemble interpretation → AI training → AI generation, and so on.

I also used a lot of machine listening. This is an AI-assisted process in which you analyze a corpus of audio, in this case, mostly collective improvisations I created with the Phaedra Ensemble. The computer can then 'listen' to other audio coming in and match it in various clever ways to your corpus. This is what I call 'playing sounds with other sounds.' It's a big part of the sound design of the work.

The bulk of this project was completed a couple of years ago, so AI technology was much more basic and genuinely weird than it is now, and there was a lot of disturbing garbage to edit through. I suppose it's similar to the glitch aesthetic in 1990s digital art, where technological failure can lead to interesting results—more 'ghost in the machine' than passing the Turing Test. The algorithms were messy and had weird outputs—half the text I'd generate would be the AI stuck in a loop repeating a single sentence for twenty pages, or sound files consisting solely of noise.

Although it probably forces you down certain cul-de-sacs or clichés, it occasionally gives you sounds and sentences that have never been heard before, which is pretty different from the consumer-focused shiny AI we use now.

It feels like the possibilities with these crude tools were in some ways greater, and we are in a fairly boring intermediate slop period between glitch-psych-AI and some possible future where we can actually collaborate with this technology.

Michael: How does this process relate to the Hum?

Jamie: I think there are conceptual tie-ins with the Hum: these ideas of computer hallucinations and sounds that do not exist. It is also a way of trying to use some form of data or information navigation as an aesthetic, which I think comes across at points in the album.

Michael: I'd like to home in on your description of this 'intermediate slop period' between 'glitch-psych-AI' and collaborative AI technology. Could you elaborate on what you envision that future collaboration might look like?

Jamie: I'd say that Versionland's use of AI is quite out of date, and tools like Suno now write convincing, if bland, music. Versionland's use of AI is much more hallucination-based—parts of it are using the OpenAI Jukebox technology that was behind some of those first viral clips, where Frank Sinatra would sing Michael Jackson. It was a much more 'squint and you can see it' technology, which I liked. It had a bit of Brechtian alienation to it, where the process was an audible layer, and it was truly weird, and often fairly shit. It's very liminal, hovering between two things in awkward but fascinating ways. No one would think Johnny Cash singing Usher was real, but it traces around the edges of how they sound in nice ways.

I have no idea what collaboration will look like in the future. A lot of the artists working in these fields have really bought into the tech boosterism, fill their boots with whatever the current trend is—hello NFTs!—and speak Silicon Valley language. So I'm neither optimistic nor pessimistic. I was struck by a recent study showing that people actually prefer genuinely terrible AI-generated poetry. The hypothesis was that actual poetry is complicated and challenging, and is fairly hard work, and maybe quite genuinely not for everyone; you need a pretty strong grounding in poetry to fully appreciate it. I don't think I fully can. So I'd hope that people still find interesting ways to break things.

Michael: You've produced two videos for this project, "Test Tubes" and "Strange Song." They're great! Can you tell me about those?

Jamie: Versionland was initially an extended video work lasting over ninety minutes, which was part of the deluge of digital and moving image works that were necessitated by the live music shutdown during the pandemic. The album is essentially a distillation and remix of this longer video work.

For "Test Tubes," I made a 3D scan of the percussionist and actor Christopher Preece and animated him using Unreal Engine. That's a video game piece of software whose aesthetic floats within an appealingly hyperreal and uncanny valley area. The lighting in the video mirrors the process in the track, which is an audio recording of Chris whispering very rapidly, which I accelerate tape-style and gradually slow down throughout the track.

"Strange Song" was made firstly as a straight piece of music, which I then split into about thirty different audio tracks all playing on tiny speakers. I disconnected these speakers and made a very messy environment of plastic, amps, wires, lights, and speakers, and got Chris Preece and Richard Jones (viola) to crawl around the space, gradually wiring the speakers into the amplifiers—in a sense, another form of 'playing sounds with other sounds.' In this way, they gradually 'built' the song. They had some instructions to sing in various relationships to the resulting audio, which was eventually mixed back into the final track. Looking at the video footage of them crawling, I was struck by their animalistic, swamp monster-like appearance. The video just very intuitively came from a desire to heighten and play with this.

Michael: Would you like to hear the Hum? Would you keep working with the concept if you did?

Jamie: I'm in the process of moving to Bristol, which has the most famous Hum in the UK, and I'm working there already fairly regularly, so it could happen there. My girlfriend was looking at a house the other day, but said the area it was in had an oppressive, low, droning sound, which I suppose means she is a Hum hearer. However, I think I'm more interested in a type of theatrical or literary extrapolation of these themes and phenomena, as opposed to working directly with them. I like the idea that you never really hear the Hum in the piece—there are no field recordings, and it's largely not even directly talked about. I would like to attempt a re-orchestration of the piece to an ensemble of only low instruments: double basses, low brass, contrabass clarinets, etc. So maybe there'd be space for a more field recording-based interpretation inside that?
